The Coming Analog Age

Perhaps the next step in AI software evolution will be preceded by a hardware revolution.

When the first computers were designed, attempts were made to represent electronically the decimal number system used by most human cultures (we count on our 10 fingers). The word digit, and therefore digital, comes from the Latin digitus, meaning finger. However, assigning the 10 digits to 10 different voltage or current values is very error-prone, because these values are continuous and are disturbed by interference and by the temperature dependence of resistors. It soon became clear that a general-purpose programmable electronic machine capable of precise computation had to operate on discrete values, and 10 reliably distinguishable states are hard to realize in an electronic circuit. By contrast, realizing 2 discrete binary states, "off" (0) and "on" (1), is relatively simple. Computers based on the binary system quickly caught on and are now so ubiquitous that our era is often referred to as the digital age.
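To make the error-proneness concrete, here is a minimal sketch that compares how much disturbance a 2-level and a 10-level encoding can tolerate. The supply voltage, the noise value, the evenly spaced levels, and the nearest-level decoder are all illustrative assumptions, not a description of any historical machine.

```python
def noise_margin(v_max: float, levels: int) -> float:
    """Largest voltage error that still decodes to the correct level.

    Assumes `levels` evenly spaced voltages between 0 and v_max and a
    decoder that picks the nearest level (illustrative assumptions).
    """
    spacing = v_max / (levels - 1)
    return spacing / 2


def decode(voltage: float, v_max: float, levels: int) -> int:
    """Map a measured voltage to the index of the nearest level."""
    spacing = v_max / (levels - 1)
    index = round(voltage / spacing)
    return max(0, min(levels - 1, index))


V_MAX = 5.0  # hypothetical supply voltage in volts
NOISE = 0.4  # hypothetical disturbance in volts

for levels in (2, 10):
    margin = noise_margin(V_MAX, levels)
    ideal = 1 * V_MAX / (levels - 1)  # voltage that encodes the value 1
    readback = decode(ideal + NOISE, V_MAX, levels)
    print(f"{levels} levels: margin {margin:.2f} V, stored 1, read {readback}")
# 2 levels: margin 2.50 V, stored 1, read 1
# 10 levels: margin 0.28 V, stored 1, read 2
```

With the same 0.4 V disturbance, the binary encoding still reads back the stored value, while the 10-level encoding slips to the neighboring digit.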

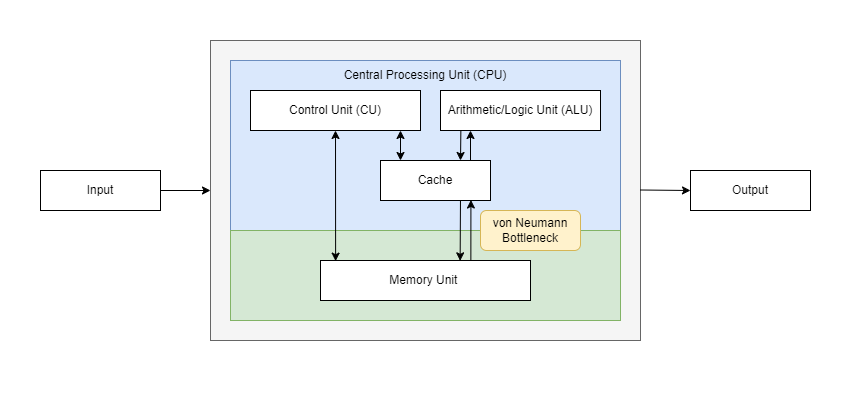

Digital computers were initially designed and optimized to convert decimal numbers to binary numbers, perform calculations, store the (intermediate) results of those calculations, and output them. For this purpose, the Hungarian-American mathematician John von Neumann proposed a computer architecture that is still in use today. According to the von Neumann architecture, a computer consists of separate components: a control unit, an arithmetic-logic unit (ALU), a memory unit, an input/output unit, and a bus system that connects these components. In the second half of the 20th century, von Neumann computers were optimized for tasks that we humans find difficult (such as calculating the square root of 463,238). It was not until the advent of artificial intelligence (AI) that computers began to learn skills that we find easy (such as distinguishing between pictures of apples and pears).

Since the early days of digital computing, we have instructed computers with machine-understandable sets of rules (programs); AI, on the other hand, enables computers to infer rules independently from the data we provide. This is a conceptually different way of interacting with computers. AI based on neural networks is first trained on a large data set; to stay with the example above, you could feed in labeled images of apples and pears. In a deep neural network consisting of an arbitrary number of layers, the weights between the individual nodes/neurons are optimized so that apples are ultimately recognized as apples and pears as pears. If the trained AI is then presented with previously unseen images of apples and pears, it will classify them correctly to the extent that the training was successful.
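The idea of inferring a rule from labeled examples can be sketched with a single artificial neuron (a perceptron), which is far simpler than the deep networks described above. The two features per fruit and all numbers below are made up for illustration; a real image classifier would work on pixel data.

```python
# Hypothetical features per fruit: (roundness, elongation), both in [0, 1].
# Label 1 = apple, label 0 = pear. All values are invented for this sketch.
TRAINING_DATA = [
    ((0.90, 0.20), 1), ((0.80, 0.30), 1), ((0.85, 0.25), 1),
    ((0.40, 0.80), 0), ((0.30, 0.90), 0), ((0.45, 0.75), 0),
]


def predict(weights, bias, features):
    """Return 1 (apple) if the weighted sum is positive, else 0 (pear)."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Perceptron learning rule: nudge the weights after every mistake."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
print(predict(weights, bias, (0.88, 0.22)))  # unseen apple-like sample -> 1
print(predict(weights, bias, (0.35, 0.85)))  # unseen pear-like sample -> 0
```

No rule such as "round means apple" is ever written down; the weights that encode it come out of the training loop.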

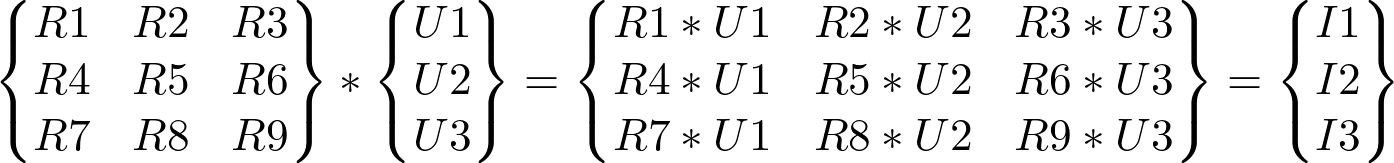

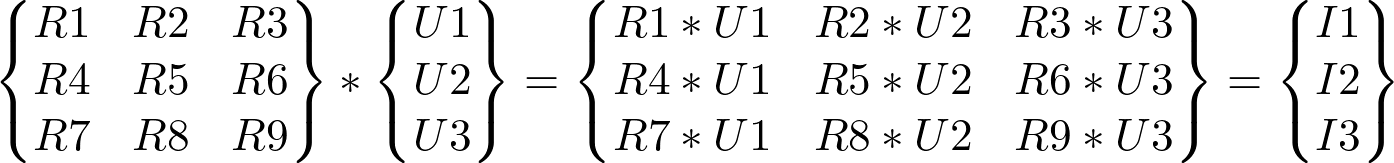

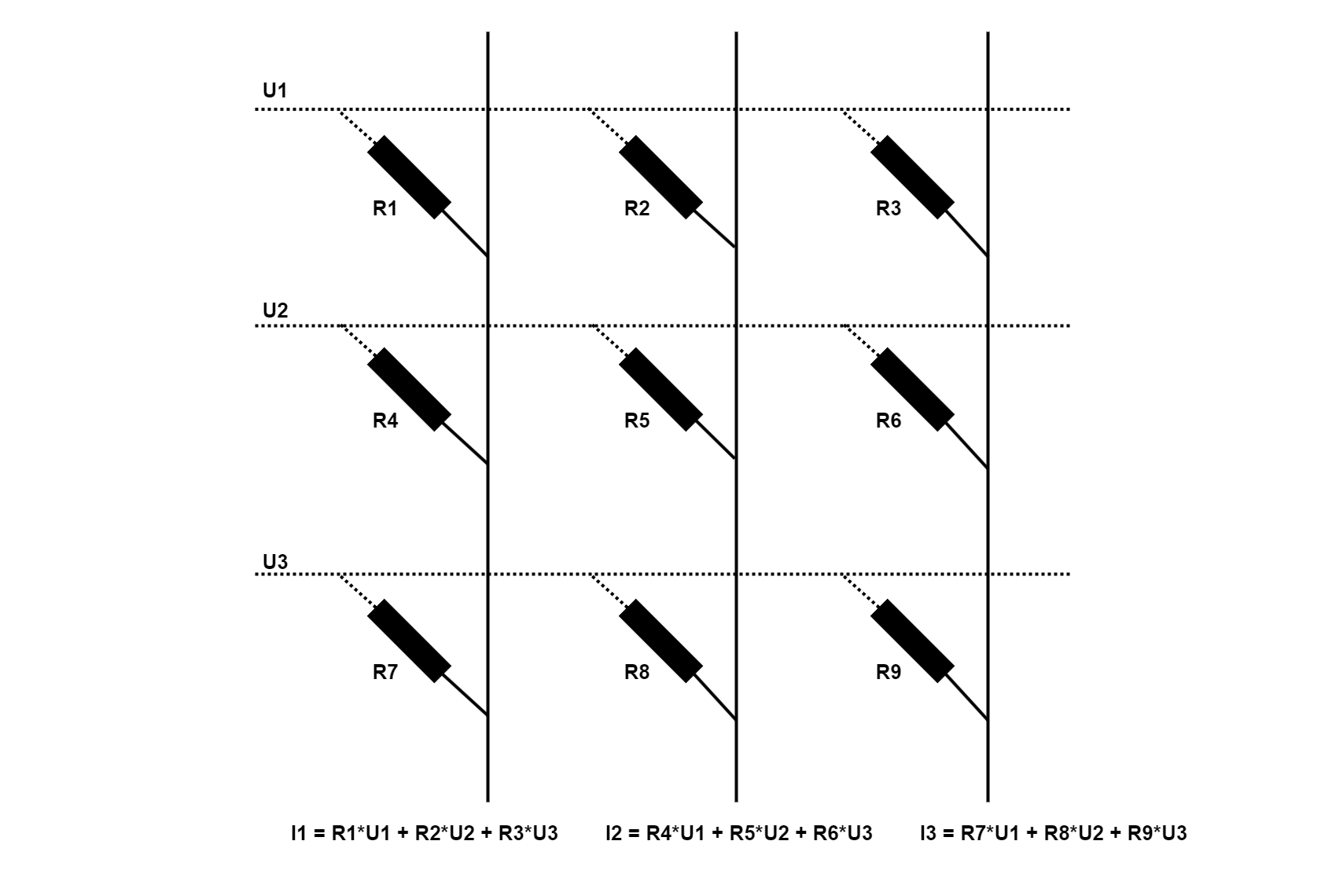

Neural network nodes and weights can be mapped to vectors and matrices, and even recent large language models (LLMs), such as OpenAI's GPT, are ultimately based on sequences of such matrix operations.
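As a rough sketch of what such a sequence looks like, the forward pass of a small fully connected network can be written as repeated matrix-vector products. The layer sizes, the weights, and the choice of ReLU as the activation function are assumptions made for this example.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    """Element-wise activation function: negative values become zero."""
    return [max(0.0, v) for v in vector]


def forward(layers, inputs):
    """Apply each layer in turn: multiply, add the bias, then activate."""
    activations = inputs
    for weights, biases in layers:
        summed = [s + b for s, b in zip(matvec(weights, activations), biases)]
        activations = relu(summed)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.5]),
]

print(forward(LAYERS, [2.0, 1.0]))  # [4.5]
```

Every call to `matvec` is nothing more than multiplications followed by additions.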

Broken down into their most basic operations, these matrix operations are multiplications and additions. To perform these operations on numeric values, the data must first be transferred from memory (DRAM) to the arithmetic/logic unit (ALU). For example, if a number is stored as a 64-bit float (see my article on IEEE 754), 1 to 2 nJ of energy is required for the transfer. In addition, there is a limit to the amount of data that can be transferred simultaneously between the processor cache and memory, known as the von Neumann bottleneck.
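A back-of-the-envelope estimate shows how quickly these transfers add up. The sketch below uses the 1 to 2 nJ figure from the text and assumes, as a deliberate simplification, that every weight of a matrix is fetched from DRAM exactly once per matrix-vector product, with no reuse from the cache. The matrix size is a hypothetical example.

```python
def transfer_energy_joules(rows, cols, energy_per_value_nj):
    """Energy to move every weight of a rows x cols matrix from DRAM once."""
    values_moved = rows * cols
    return values_moved * energy_per_value_nj * 1e-9  # nJ -> J


ROWS, COLS = 4096, 4096  # hypothetical layer size

for energy_nj in (1.0, 2.0):
    joules = transfer_energy_joules(ROWS, COLS, energy_nj)
    print(f"{energy_nj:.0f} nJ per value: {joules:.4f} J per matrix-vector product")
# 1 nJ per value: 0.0168 J per matrix-vector product
# 2 nJ per value: 0.0336 J per matrix-vector product
```

Under these assumptions, a single layer of this size costs on the order of hundredths of a joule in data movement alone, before any arithmetic is done.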

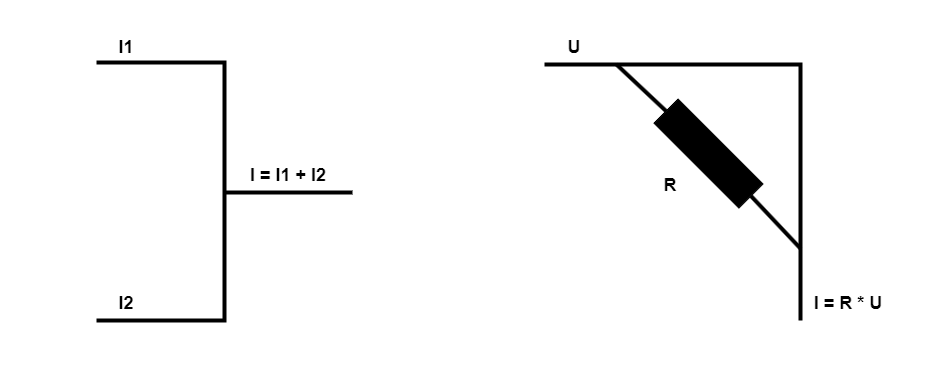

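In principle, multiplication and addition can be performed directly in electronic circuits. By Ohm's law, the current through a resistor is the product of the applied voltage and the resistor's conductance (a multiplication), and by Kirchhoff's current law, the currents flowing into a shared wire add up (an addition). This type of analog computation promises much higher power efficiency and can be performed directly in memory, avoiding the von Neumann bottleneck.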
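The following sketch simulates an idealized resistor grid (often called a crossbar) to show how a matrix-vector product falls out of these circuit laws. It assumes ideal components: no wire resistance, no noise, and output wires held at 0 V. The conductance and voltage values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Output currents of an ideal resistor crossbar.

    conductances[i][j] is the conductance (in siemens) of the resistor
    connecting input wire i to output wire j; voltages[i] is the voltage
    (in volts) applied to input wire i.
    """
    n_outputs = len(conductances[0])
    currents = [0.0] * n_outputs
    for row, voltage in zip(conductances, voltages):
        for j, conductance in enumerate(row):
            # Ohm's law gives the current through one resistor (multiply);
            # Kirchhoff's current law sums the currents on wire j (add).
            currents[j] += conductance * voltage
    return currents


G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]  # hypothetical conductances in siemens
V = [0.2, 0.1]      # hypothetical input voltages in volts

for j, current in enumerate(crossbar_currents(G, V)):
    print(f"output {j}: {current * 1e6:.2f} uA")
# output 0: 0.50 uA
# output 1: 0.80 uA
```

In a physical crossbar, all of these multiplications and additions happen at once, in the same place where the weights are stored.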

This raises the question of whether a conceptually new kind of software requires a conceptually different kind of computer. So far, AI has been primarily a software revolution, but there are increasing signs that the next step in the evolution of AI will have to be preceded by a hardware revolution.

Using Kirchhoff's circuit laws as suggested above, we leave the realm of digital/binary computing and enter an analog world. An analog signal (from the ancient Greek analogos, meaning "similar") imitates the quantity it represents. For example, in an analog record, the sound waves are mimicked by tiny bumps on the surface, which can then be read by the record player. For AI, we would mimic the weights between the neurons with programmable resistors.

There are many possible ways to implement programmable resistors, of which phase-change memory is considered the most mature technology to date. In this technology, an electrical pulse changes the state of the device between amorphous (higher resistance) and crystalline (lower resistance). The transition between the two states is continuous and therefore analog. This allows the weights of a previously trained neural network to be mapped into a circuit on which AI can run. IBM published groundbreaking research in Nature in August 2023 showing that AI for speech recognition can run 14 times more power-efficiently on an analog chip with phase-change memory than on a digital computer chip.
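One practical wrinkle is that a conductance cannot be negative, while trained weights can be. A commonly described workaround is to encode each weight as the difference between two devices. The sketch below illustrates that idea only; the conductance range is hypothetical, and this is not a description of the design in the IBM paper.

```python
G_MIN = 1e-6   # hypothetical lowest programmable conductance in siemens
G_MAX = 25e-6  # hypothetical highest programmable conductance in siemens


def weight_to_conductance_pair(weight, w_max):
    """Encode a signed weight as (g_plus, g_minus).

    The weight is represented by g_plus - g_minus. Weights outside
    [-w_max, w_max] are clipped to that range.
    """
    clipped = max(-w_max, min(w_max, weight))
    delta = abs(clipped) / w_max * (G_MAX - G_MIN)
    if clipped >= 0:
        return G_MIN + delta, G_MIN
    return G_MIN, G_MIN + delta


def conductance_pair_to_weight(g_plus, g_minus, w_max):
    """Recover the weight that a conductance pair represents."""
    return (g_plus - g_minus) / (G_MAX - G_MIN) * w_max


for weight in (0.5, -0.25, 3.0):
    g_plus, g_minus = weight_to_conductance_pair(weight, w_max=1.0)
    recovered = conductance_pair_to_weight(g_plus, g_minus, w_max=1.0)
    print(f"weight {weight:+.2f} -> recovered {recovered:+.2f}")
```

The last example weight lies outside the representable range and comes back clipped, which hints at why weights have to be scaled to fit the hardware before they are programmed into it.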

Today, AI models are constantly being optimized, so a neural network printed in silicon may not seem very appealing right now. But in the future, mature AI applications could run on analog chips at the edge, revolutionizing everything from robotics to aerospace.